Natural language understanding (NLU) by machines is of great scientific, economic and social value. It has important applications in human-machine interaction (e.g., voice assistants), in human-human communication (e.g., language translation), and in machine reading and mining of large repositories of textual information. Although the field has advanced substantially due to progress in statistical learning, NLU systems are still far from reaching human performance. The primary goal of CALCULUS is to advance the state of the art of NLU.

To achieve this goal, CALCULUS takes inspiration from neuroscience and cognitive science research, which shows that humans perform language understanding tasks instantly by relying on their capability to imagine or anticipate situations. They have learned these imaginations, which also integrate commonsense world knowledge, from lifelong verbal and perceptual experiences. Neuroscientists have demonstrated the existence of modality-independent conceptual representations in the brain, apart from more concrete modality-dependent and category-specific representations. When parsing language utterances, humans are extremely fast at sampling from the brain a set of these representations that best predict and explain the current language input (based on linguistic and contextual cues). Humans also succeed in learning from analogical situations, often relying on these imaginary conceptualizations.

Inspired by these models of how humans process language, CALCULUS designs, implements and evaluates innovative machine learning paradigms for natural language understanding.

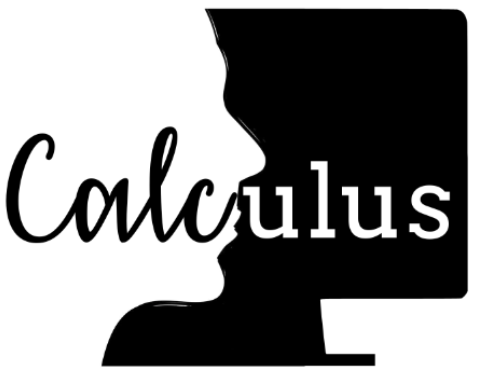

CALCULUS focuses on learning effective representations of events and their narrative structures that are trained on language and visual data. In this process, the grammatical structure of language will be grounded in the geometric structure of visual data while embodying aspects of commonsense world knowledge. Continuous representations (e.g., in the form of vectors with continuous values) have already proved successful in jointly capturing visual and verbal knowledge. An important goal of CALCULUS is to add structure to these representations, allowing for compositionality, controllability and explainability.

CALCULUS focuses on continual learning and on storing, retrieving and reusing effective representations. Current deep learning techniques need strong supervision from many annotated training examples to learn to execute a task. Humans, in contrast, learn from limited data and keep learning almost without forgetting what they have learned before. CALCULUS aims to develop novel algorithms for representing, retrieving and making inferences with prior knowledge, commonsense knowledge or content found earlier in a discourse.

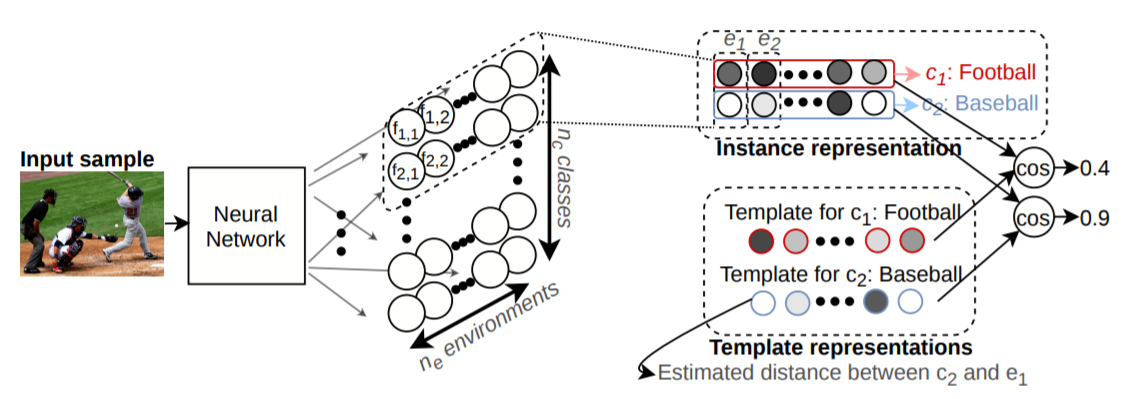

The language understanding of CALCULUS connects to the real world. Spatial language is translated to 2D or 3D coordinates in the real world, while temporal expressions are translated to timelines. This process integrates commonsense knowledge that is learned from perceptual input such as visual data. The best models for language understanding will be integrated in a demonstrator that translates language to events happening in a 3D virtual world.
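
As a purely illustrative sketch of what translating spatial language to coordinates and temporal expressions to a timeline could look like, consider the toy Python example below. It is not the CALCULUS method: the names `RELATION_OFFSETS`, `Placement`, `place` and `build_timeline` are hypothetical, and the fixed offsets are hand-written assumptions standing in for knowledge that the project aims to learn from perceptual data.

```python
from dataclasses import dataclass

# Hypothetical, hand-written offsets (arbitrary scene units) for a few
# spatial relations. A real system would learn such mappings from data.
RELATION_OFFSETS = {
    "left of": (-1.0, 0.0),
    "right of": (1.0, 0.0),
    "above": (0.0, 1.0),
    "below": (0.0, -1.0),
}


@dataclass(frozen=True)
class Placement:
    """An object name together with its 2D position in the scene."""
    name: str
    x: float
    y: float


def place(target: str, relation: str, reference: Placement) -> Placement:
    """Position `target` relative to `reference` according to `relation`."""
    dx, dy = RELATION_OFFSETS[relation]
    return Placement(target, reference.x + dx, reference.y + dy)


def build_timeline(events: list[tuple[str, int]]) -> list[str]:
    """Order (event, hour) pairs chronologically and return the event names."""
    return [name for name, _hour in sorted(events, key=lambda e: e[1])]


# "The lamp is above the table; the chair is left of the table."
table = Placement("table", 2.0, 0.0)
lamp = place("lamp", "above", table)
chair = place("chair", "left of", table)

# "Dinner at 19h, breakfast at 8h, lunch at 12h."
timeline = build_timeline([("dinner", 19), ("breakfast", 8), ("lunch", 12)])
```

A lookup table like this cannot handle vague or context-dependent expressions (how far is "near"?), which is where commonsense knowledge learned from visual data would be needed.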

Beyond its goals of language understanding and novel machine learning paradigms, CALCULUS has an impact on a variety of research domains and applications, including robotics, human-machine interaction and artificial intelligence.